BIOS IT Blog

Introducing the New NVIDIA Blackwell: A Technical Breakdown

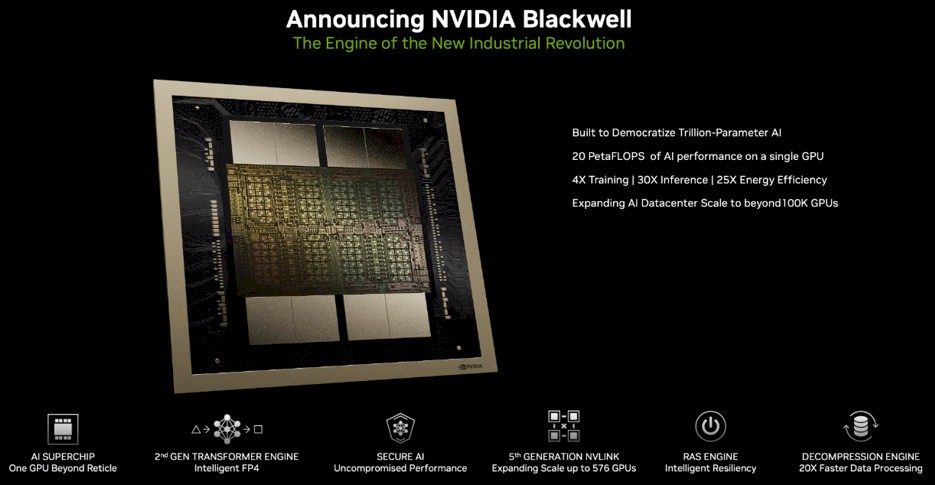

NVIDIA unveiled the Blackwell architecture at GTC 2024. It introduces six revolutionary technologies that together enable AI training and real-time LLM inference for models of up to 10 trillion parameters.

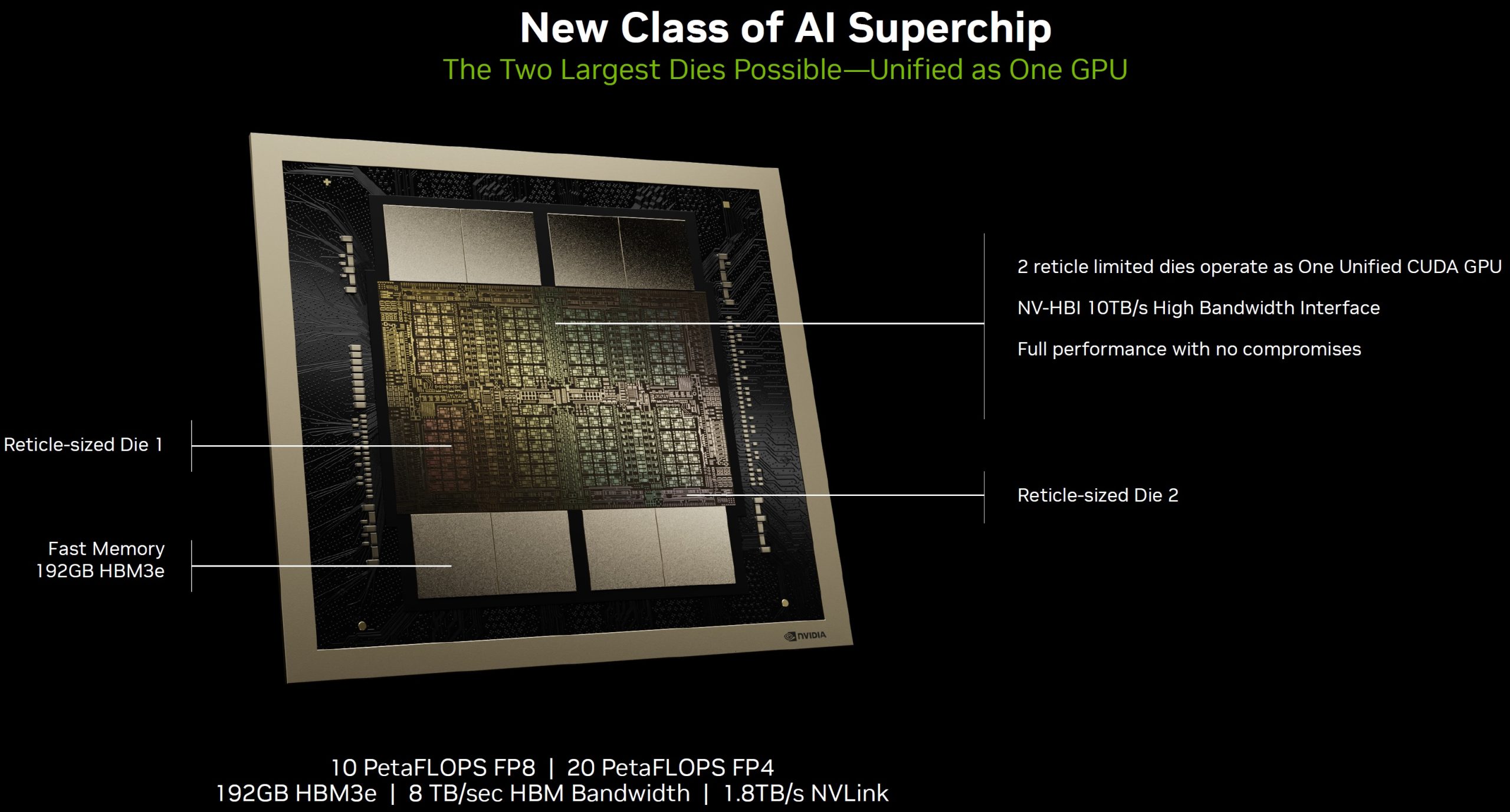

The first of these technologies is the Blackwell GPU itself, which packs 208 billion transistors. It is manufactured on a custom-built TSMC 4NP process and consists of two reticle-limit GPU dies connected by a 10 TB/s chip-to-chip link into a single, unified GPU. This design sets a new standard for processing power, meeting the increasingly complex demands of AI and other compute-intensive workloads.

Another key component is the Second-Generation Transformer Engine, fuelled by new micro-tensor scaling support and advanced dynamic range management algorithms. Combined with new 4-bit floating point (FP4) AI inference capabilities, it allows Blackwell to support double the compute and model sizes, significantly improving AI processing efficiency. Fifth-generation NVLink, meanwhile, delivers 1.8 TB/s of bidirectional throughput per GPU, providing high-speed communication among up to 576 GPUs for the most complex LLMs. This is essential for accelerating multitrillion-parameter and mixture-of-experts AI models.
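To illustrate the general idea behind micro-tensor scaling, here is a minimal Python sketch of block-scaled 4-bit quantization. It assumes the common FP4 E2M1 encoding (representable magnitudes from 0 to 6) and a hypothetical block size of 16 values, with every block sharing one scale factor. It keeps each scale in full precision, which real hardware formats do not, so treat it as a conceptual sketch rather than NVIDIA's implementation. The hypothetical `weight_bytes` helper shows the arithmetic behind "double the model sizes", assuming the comparison is against 8-bit weights: halving the bits per parameter halves the raw weight memory.

```python
# Magnitudes an FP4 E2M1 value can represent (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_MAX = FP4_E2M1_GRID[-1]


def quantize_block(block):
    """Map one block to FP4 values that share a single scale factor."""
    amax = max(abs(x) for x in block)
    # Choose the scale so the block's largest magnitude lands on FP4_MAX.
    scale = amax / FP4_MAX if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = abs(x) / scale
        nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - mag))  # round to nearest
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def quantize_tensor(values, block_size=16):
    """Split a flat list into blocks, each with its own ("micro-tensor") scale.

    block_size=16 is a hypothetical choice for illustration.
    """
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize_tensor(blocks):
    """Rebuild approximate values by multiplying each code by its block scale."""
    return [scale * code for scale, codes in blocks for code in codes]


def weight_bytes(num_params, bits_per_param):
    """Raw weight storage in bytes, ignoring scale-factor overhead."""
    return num_params * bits_per_param // 8


if __name__ == "__main__":
    weights = [0.02, -0.75, 1.3, 0.0, 2.9, -3.1, 0.4, 0.05]
    blocks = quantize_tensor(weights, block_size=4)
    print(dequantize_tensor(blocks))
    # 10 trillion parameters at 8 bits vs 4 bits per weight: 10 TB vs 5 TB.
    print(weight_bytes(10 * 10**12, 8), weight_bytes(10 * 10**12, 4))
```

Giving each small block its own scale means one outlier only degrades the precision of its own block rather than the whole tensor, which is what makes such a narrow format usable for inference.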

The dedicated RAS Engine reflects the architecture's focus on reliability, availability, and serviceability. It maximizes system uptime and improves resiliency for massive-scale AI deployments, and it uses AI-based preventative maintenance to run diagnostics and forecast reliability issues, reducing operating costs and minimizing interruptions. For security, Blackwell offers advanced confidential computing capabilities that protect AI models and customer data without compromising performance. This is particularly important in privacy-sensitive industries such as healthcare and financial services, where data security and privacy are paramount.

Lastly, the Blackwell architecture includes a dedicated decompression engine that supports the latest formats, accelerating database queries and data analytics. This significantly improves data processing performance and shows the architecture's versatility across a wide range of computational tasks.

NVIDIA GB200 NVL72:

The NVIDIA GB200 NVL72 is built around the newly announced NVIDIA GB200 Grace Blackwell Superchip. Each superchip connects two NVIDIA Blackwell Tensor Core GPUs and one NVIDIA Grace CPU over the NVLink Chip-to-Chip (NVLink-C2C) interface, which provides 900 GB/s of bidirectional bandwidth. This gives the processors coherent access to a unified memory space, simplifying programming and accommodating the substantial memory requirements of trillion-parameter LLMs, transformer models, large-scale simulations, and generative models for 3D data. The GB200 NVL72 itself is a multi-node, liquid-cooled, rack-scale system that combines 36 Grace Blackwell Superchips (72 Blackwell GPUs and 36 Grace CPUs) interconnected by fifth-generation NVLink. It provides up to a 30x performance increase over the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads, while reducing cost and energy consumption by up to 25x.
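As a quick sanity check on the rack's composition, the sketch below derives the NVL72's GPU and CPU counts from the superchip layout described above: two Blackwell GPUs and one Grace CPU per GB200 Superchip, with 36 superchips per rack. The `Superchip` and `Rack` classes are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Superchip:
    """One GB200 Grace Blackwell Superchip, as described in the text."""
    gpus: int = 2  # Blackwell Tensor Core GPUs
    cpus: int = 1  # Grace CPU


@dataclass(frozen=True)
class Rack:
    """A rack-scale system built from identical superchips (hypothetical model)."""
    superchips: int
    chip: Superchip = field(default_factory=Superchip)

    @property
    def gpus(self) -> int:
        return self.superchips * self.chip.gpus

    @property
    def cpus(self) -> int:
        return self.superchips * self.chip.cpus


if __name__ == "__main__":
    nvl72 = Rack(superchips=36)
    print(nvl72.gpus, nvl72.cpus)  # 72 36
```

The "72" in the product name is the number of NVLink-connected Blackwell GPUs in one rack.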

Fifth-generation NVLink in the NVIDIA GB200 NVL72 enables up to 576 GPUs to be connected in a single NVLink domain, with over 1 PB/s of total bandwidth and 240 TB of fast memory. Each NVLink switch tray provides 144 NVLink ports at 100 GB/s, so the system can fully connect all 18 NVLink ports on each of the 72 Blackwell GPUs. The result is 1.8 TB/s of bidirectional throughput per GPU, over 14 times the bandwidth of PCIe Gen5, giving the most complex large models fast, direct communication between GPUs.
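The bandwidth figures above follow from simple arithmetic on the port counts. The sketch below reproduces them; the PCIe comparison assumes a PCIe Gen5 x16 link at roughly 64 GB/s per direction (128 GB/s bidirectional), and the switch-tray count is derived from the port totals rather than taken from a spec sheet. Function and constant names are illustrative.

```python
import math

# Figures taken from the text above.
GPUS = 72
NVLINK_PORTS_PER_GPU = 18
PORT_BANDWIDTH_GB_S = 100  # bidirectional, per NVLink port
PORTS_PER_SWITCH_TRAY = 144
# Assumption: PCIe Gen5 x16, about 64 GB/s per direction.
PCIE_GEN5_X16_GB_S = 128


def per_gpu_bandwidth_gb_s() -> int:
    """Bidirectional NVLink bandwidth per GPU: ports x per-port bandwidth."""
    return NVLINK_PORTS_PER_GPU * PORT_BANDWIDTH_GB_S


def switch_trays_needed() -> int:
    """Trays required so every GPU NVLink port lands on a switch port."""
    return math.ceil(GPUS * NVLINK_PORTS_PER_GPU / PORTS_PER_SWITCH_TRAY)


def pcie_ratio() -> float:
    """Per-GPU NVLink bandwidth as a multiple of one PCIe Gen5 x16 link."""
    return per_gpu_bandwidth_gb_s() / PCIE_GEN5_X16_GB_S


def rack_aggregate_tb_s() -> float:
    """Sum of per-GPU NVLink bandwidth across the 72-GPU rack, in TB/s."""
    return GPUS * per_gpu_bandwidth_gb_s() / 1000


if __name__ == "__main__":
    print(per_gpu_bandwidth_gb_s())  # 1800 (GB/s, i.e. 1.8 TB/s)
    print(switch_trays_needed())     # 9
    print(round(pcie_ratio(), 2))    # 14.06
    print(rack_aggregate_tb_s())     # 129.6
```

Applying the same per-GPU figure to a full 576-GPU domain gives 576 × 1.8 TB/s ≈ 1,037 TB/s, consistent with the "over 1 PB/s" quoted above.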

To learn more, contact us today!
